Every online business owner knows that search engine optimization (SEO) is key to achieving success when it comes to being ranked first on the Search Engine Results Pages (SERPs).

However, when people talk about SEO, they instantly think about the content optimization and the keywords they can use to create compelling content that will draw in readers by the bucketload.

One thing that people ignore is the fact that your blog or website has to be technically optimized in order for search engines to crawl the site in search of content and keywords and also to provide a great user experience across all devices.

Think of it as a car that must be mechanically sound before it can move, even when it has a full tank of fuel.

If your website or blog has technical errors and obstacles, then the user experience will be affected, which will lower your rank.

Sometimes, even the search engine crawlers will not be able to access the content, which only serves to ruin your ranking even more.

This article takes a deep dive into technical SEO.

The first section is to help you understand technical SEO since it is a topic that is little-known by most blog and website owners.

The second section is a practical approach to technical SEO, which will help you know how you can optimize your blog or website in this respect.

Table of Contents

Section 1 – Understanding Technical SEO

- Technical SEO is a Must, Not a Maybe

- The Evolution of Technical SEO

- A new dispensation: Site, Search and Content Optimization

- The 6 Hats of SEO

- Technical SEO has become more important today than it ever was

Section 2 – How to perform Technical SEO – 15 tips

- Optimize the page speed

- Make your site mobile-friendly

- Track and fix errors

- Optimize the site architecture

- Optimize images

- Remove duplicate content

- Create structured data

- Check your sitemap

- Fix Error 404

- Fix availability errors

- Check for browser compatibility

- Fix security issues

- Optimize your site for international audiences

- Use canonicalization

- Register for Webmaster Tools

Section 1 – Understanding Technical SEO

Technical SEO is a Must, Not a Maybe

Did you know that technical SEO is a cornerstone required to build a successful optimization strategy?

In this article, you will learn why technical SEO has become more important today than ever before.

SEO is the most dynamic and fast-expanding marketing channel.

The growth of SEO has been tremendous over the last decade.

Through the era of the highly fluid algorithm evolution, SEO has always been the cornerstone of a successful digital marketing strategy – after all, 51% of all online traffic starts from an organic search on the search engines.

SEO is a necessity and not an option

However, in this increasingly competitive digital marketing arena, SEO requires new approaches and skills for it to be successful.

The focal point of search has changed drastically and become decentralized because people are using their mobile devices for browsing and the search has been integrated with Google Assistant and Voice Search.

This means that the traditional SERPs viewed with desktops and laptops are long gone, and have been replaced by dynamic and visual SERPs accessed from everywhere using mobile devices.

This has had a great impact on businesses all over the world, with SEO becoming more of a collaborative task that requires several techniques to work hand in hand to achieve the best results.

At the center of all these collaborations lies technical SEO, as a foundation on which a successful marketing strategy is built.

The Evolution of Technical SEO

In order to understand how critical technical SEO is, you must understand how it evolved to what it is today.

All roads of SEO as we know it today began with technical SEO and it is just the skills being used that have changed, as you can see below.

- Early 2000’s – Web development was closely linked to technical SEO. The most important ranking factor was making the site’s code indexable and highly crawlable.

- Mid 2000’s – Black Hat tactics were used to manipulate rankings in the SERPs. Keyword stuffing and link buying were the way to win in SEO. Google’s algorithms were unable to keep up with the plethora of ranking manipulation techniques being used at the time.

- Late 2000’s – Google started to catch up and Black Hat SEO slowly became obsolete. SEO became mainstream and marketers had to create new strategies to rank better.

- Early 2010’s – Google brings the hammer down on low-quality backlinks and thin content, and hands out a wide range of penalties.

- Mid 2010’s – Content and SEO begin to come together; SEO is now about delivering the best user experience. Creativity becomes as important as technical knowledge. SEO professionals now start collaborating with video and social media teams.

- 2015-16 – The SERPs change completely – content discovery becomes fragmented across various platforms and devices.

- 2017 – Digital assistants and voice search become hugely important and the use of mobile to browse the Internet surpasses the desktop.

- 2018 – 2020 – Search goes all over the place. The Internet of Things (IoT) soars and SEO must be performed for every device on every platform.

SEO has always been comprised of techniques to drive high-quality converting traffic through organic search.

The main objective of achieving a high rank on organic search has changed drastically since the early days when technical SEO was supreme.

The ability of search engine algorithms to crawl and index a website has remained the foremost consideration when designing an SEO strategy.

In the beginning, content was secondary; a channel that used keywords to boost rankings. Over time, link building gained ground as Google started considering links to evaluate and rank content.

The ultimate goal of marketers has never changed – bringing in high-quality traffic to a website or blog.

As a result, there has always been a cat and mouse game, with marketers doing everything to leverage content to gain high rankings on the SERPs.

When Google discovered keyword cloaking was being used to manipulate rankings, and subsequently put a stop to the practice, black hat SEO consultants started buying links to manipulate the rankings once more.

Google came up with Panda and Penguin algorithm updates, which put an end to those fishy tactics.

People even started wondering whether SEO was dead. However, this failed to address one key point.

The point was – as long as search was the main way for people to discover information on the Internet, SEO was always going to be necessary.

With modern SEO practices, the issue of using murky SEO tactics is a distant memory. SEO has now converged with content marketing.

Today, the industry has grown strong and the best SEO techniques are being rewarded by being ranked high on the SERPs.

Due to this evolution, SEO has stopped being a fully rational discipline and evolved into a well-rounded creative content creation technique.

This has changed how SEO is done, with collaborations becoming more important as different digital marketing disciplines came together.

Technical SEO has now become a discipline for creating the best search engine practices and has no room for manipulation.

Technical SEO now means complying with the requirements for proper recognition by the search engine spiders, which in turn boosts ranking.

There are four main critical areas for achieving the best technical SEO performance:

- Content – making sure that the content on the site can be found and indexed, especially by using log file analysis to understand how the search engine spiders access the site, so that they can reach every element of the content.

- Structure – coming up with a URL structure and site hierarchy that enables users and search engines to navigate relevant content. The structure should also embrace a great flow of internal link equity throughout all the pages of the site or blog.

- Conversion – checking on any blockages that may hinder users from navigating through the website or blog and resolving them properly.

- Performance – When the above three aspects have been dealt with properly, there will be an improvement in the performance of the site. Technical SEO is a lot more than simple housekeeping of the site.
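
The log file analysis mentioned under Content can be sketched in a few lines of Python. This is only an illustration: it assumes the server writes the common "combined" access-log format, the `crawler_hits` helper and the sample log lines are made up for the example, and matching on the user-agent string alone does not prove a request really came from Google.

```python
import re
from collections import Counter

# Matches the request path, status code, and user agent in a line written in
# the common "combined" access-log format (an assumption about the server).
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def crawler_hits(lines, bot_name="Googlebot"):
    """Count (path, status) pairs requested by user agents containing bot_name."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match and bot_name in match.group("agent"):
            hits[(match.group("path"), int(match.group("status")))] += 1
    return hits


# Made-up sample lines, for illustration only.
sample_log = [
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /blog/ HTTP/1.1" 200 5120 '
    '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Oct/2023:13:56:02 +0000] "GET /old-page HTTP/1.1" 404 312 '
    '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Oct/2023:13:57:10 +0000] "GET /blog/ HTTP/1.1" 200 5120 '
    '"-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

for (path, status), count in crawler_hits(sample_log).items():
    print(path, status, count)
```

A report like this shows which pages the spiders actually request and which of those requests end in errors, which is a reasonable starting point for deciding what to fix first.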

Given what needs to be done to rank better on the SERPs, many have questioned whether the term “SEO” fully embraces what is being done today.

Let us examine this a little more.

A new dispensation: Site, Search and Content Optimization

As mentioned above, there are those who question whether the term “SEO” truly embraces the techniques being used to boost ranking on the search engines.

This is because the techniques have expanded to include conversion rate optimization, user experience, and content marketing.

The techniques being used today are targeted at:

- Ensuring accessibility of content in all major social networks and search engines

- Optimizing sites for the best user experience

- Creating engaging content to capture the attention of audiences across several digital marketing channels

According to a study done by BrightEdge, only 3% of marketers believe that content and SEO can be treated as two separate entities.

SEO can today be described as a new dispensation for site, search, and content optimization. Search engines have evolved to be more powerful tools compared to what they used to be when the goal was to appear in the first 10 blue links on the first page of the SERPs.

Today, the goal of SEO is to get a website or blog in front of target audiences, wherever they are, be it on the search engines, YouTube, or social media networks.

The content that people are consuming today is vast and varied, and you have to optimize for all of it.

When there is a new technological trend, technical SEO experts are always at the front when it comes to innovation.

Recent industry developments can attest to this fact.

Brands are now expected to make sure that their web presence achieves the best standards to keep up with today’s consumers, thanks to the fact that Accelerated Mobile Pages (AMP) and Progressive Web Apps (PWAs) have become central to user experience.

Becoming “mobile-first” is crucial to how users browse the site, but it also requires some technical know-how.

PWAs are now being incorporated into Google Chrome which shows that mobile-first is something that all digital businesses should adopt.

Brands are now required to look beyond the SERPs and think of new ways in which their content will be discovered by their target customers.

It is now crucial to adopt a new approach towards SEO to leverage the upcoming consumer trends.

This means combining traditional SEO techniques with site experience optimization.

Technical SEO is very important when it comes to creating the best user experience on a website or blog.

The 6 Hats of SEO

Search Engine Optimization has never existed, and can never exist, in a vacuum. It has always been about collaboration, from the day the term was coined to this very day.

It is therefore important that you know about the frameworks that bring together the specialist skills that are necessary for today’s organic marketing campaigns.

The most well-known hats in SEO are Black Hat and White Hat, and there is the occasional mention of the Grey Hat.

Edward de Bono’s Six Thinking Hats framework provides 6 different hats that can add a better structure and understanding of collaboration within the SEO industry.

Each hat is determined by a different thought and approach to SEO and has unique functions aimed at delivering successful results.

These could reflect different mindsets by an individual, different individuals, or different departments in an organization.

The main aim is to improve the collaboration required to succeed in organic marketing campaigns, and also remove the risk of abuse by approaching every obstacle from a different angle before proceeding.

- The White Hat

This is a term that most SEO professionals know very well. The White Hat mindset is based on facts, data points, and statistics. This is the most objective way of approaching any SEO challenge.

Who wears the White Hat?

Analytics specialists and Data analysts belong to this category.

Why is it important to SEO?

Starting a discussion based purely on data focuses everybody’s attention on the truths of cross-channel performance.

Data that has no context is meaningless – this approach alone is not enough to address the needs of the customers.

- The Yellow Hat

The Yellow Hat approach embraces optimism, focusing mainly on the benefits that a marketing or SEO strategy can bring to brands and their target consumers.

Who wears the Yellow Hat?

Everybody embraces optimism, so this is a mindset that is common to many SEO specialists. The main objective here is to maintain some form of structure that benefits the brands and consumers at the same time.

Why is it important to SEO?

People always have a lot of ideas when they are thinking of marketing their products and services. Some of them are discarded even before they have achieved their full potential. It is important to have an alternative view to exploring the full potential of an idea, even if it means that only a few of its components can work.

- The Red Hat

The Red Hat approach considers emotions and feelings and is usually driven by a gut feeling about an idea. The benefit of this approach is that it reduces the tendency for SEO professionals to be overly rational and depend too much on data-driven solutions.

Who wears the Red Hat?

This is ideal for someone who works closely with the target audience, and one who thinks a lot about audience data when making decisions.

Why is it important to SEO?

First impressions matter when you are fighting to regain consumer attention to your products and services. Content marketing campaigns rely on getting first impressions right, and it is reasonable for one to rely on the gut instinct at these times.

- The Green Hat

The Green Hat mindset embraces spontaneity and creativity in SEO. This helps tackle issues from a new perspective. Where others may see a challenge, the Green Hat sees a new opportunity.

Who wears the Green Hat?

Although everyone has a creative streak in them, the Green Hat has to be comfortable about sharing their ideas with a group, even if some of them bounce hard.

Why is it important to SEO?

Best practices can only take your SEO campaign so far. These best practices simply level the playing field. New ideas are what shake things up and make a difference. There are many avenues that are yet to be explored when it comes to SEO. The Green Hat is crucial to bringing innovation.

- The Blue Hat

The Blue Hat looks at the big picture and brings all elements together and creates a powerful SEO marketing campaign.

Who wears the Blue Hat?

This is the person who acts as a project manager or leader and helps people keep their focus.

Why is it important to SEO?

This role is crucial since SEO in the modern world is a set of diverse disciplines. In order to have the best SEO campaign, the various departments or aspects have to work together on an ongoing basis. The Blue Hat is the glue that keeps things working smoothly across the various departments.

- The Black Hat

The Black Hat is the black sheep of the SEO family. We have included this hat as a means of showing that it is an abomination in the industry. These are people with a “devil’s advocate” approach to SEO.

Who wears the Black Hat?

Nobody openly wears the Black Hat in today’s SEO arena. However, when you are outsourcing your SEO, you should be wary of people who offer SEO solutions that have little or no transparency.

Apart from the Black Hat, all the other hats that we have mentioned above offer a wide range of benefits for your business.

The number of hats that one can wear is optional. You can wear one, or wear a combination of several.

You just need to understand where you are coming from when you undertake technical SEO and any other optimization task for your business.

The benefits include:

- Avenues of learning new skills through observation and practical experience.

- Chances to add more digital marketing functions in developing a successful SEO campaign.

- A centralized way of approaching SEO that incorporates the expertise offered by different professionals in the field.

- Avenues to include several new digital functions in the SEO strategy.

Technical SEO has become more important today than it ever was

The huge evolution of SEO from what it was at its inception requires a different application of technical skills.

SEO has become a much more sophisticated and relevant digital channel that has outgrown the original description of “Search Engine Optimization”.

The basic principles of organic search are still intact, and technical SEO has become a critical process that drives the performance of websites across all devices.

Technical SEO specialists are leading the technological innovations that make this possible. With the advent of digital assistants, Progressive Web Apps, and voice search, technical SEO is now more important than before.

There is a need to be technically able to create a website that will perform well with the search engine spiders.

Where and how you apply this knowledge is the driver of technical success.

You should primarily focus on optimizing:

- The website or blog

- For mobile apps

- For all devices – desktop and mobile

- For voice search

- For virtual reality

- For agents

- For vertical search engines such as Amazon and YouTube

The growth of AI on the Internet has created the need for technical SEO experts and data scientists to help create the best user experience.

This is the time that you should act.

It is time that you did some technical SEO on your website or blog if you want to succeed across all platforms and devices.

The following is a step-by-step guide that will show you how you can achieve technical SEO success for your website or blog.

Section 2 – How to perform Technical SEO – 15 tips

You have now used keyword research and come up with valuable content on your website or blog, but SEO does not stop there.

You must make sure that the content you have created can not only be read by humans, but by the search engines too.

Having a deep technical understanding is not critical to this process, but you must have a grasp on the functions of the technical assets, so you can leverage them properly when performing technical SEO on your blog or website.

It is important that you speak the language that developers use since you will need them to help you perform some of the technical SEO on your blog or website.

If you cannot communicate properly on a technical level, you cannot perform technical SEO on your site, even with the help of a developer.

When you can speak the same technical language, you are better equipped to unravel the mystery that blocks the achievement of the proper technical optimization of your site.

Tip – You need cross-team support to achieve success

It is crucial that you have a good relationship with your developers to solve any technical SEO obstacles experienced on both sides.

You don’t have to wait until a technical matter crashes your SEO rankings. Always engage a developer right from the planning stage so that you avoid these obstacles. Fixing them at a later date could cost you more money and time.

Apart from having cross-team support from a developer, you need to understand technical SEO so you ensure that your website or blog is structured for both humans and search engine spiders.

It is important that you clearly understand how to go about this since the technical structure of your blog or website can have a huge effect on your performance on the SERPs.

- Optimize the page speed

If there is a technical issue that delays the loading of a page, the delay may cause a user to stop accessing it and move on to another blog or website.

A study published by Think With Google found that the probability of a user bouncing from a site rises sharply as the page loading time increases.

Take a look at what happens as the loading time of your page grows from 1 second:

- To 3 seconds – the probability of bounce increases by 32%

- To 5 seconds – the probability of bounce increases by 90%

- To 6 seconds – the probability of bounce increases by 106%

- To 10 seconds – the probability of bounce increases by 123%

This is why improving your page loading speed is one of the technical optimization tasks that you should perform on your blog or website.

The page loading speed is linked to the internal structure of the site, such as the organization of the code, the hosting server, and the image sizes.

Start by checking on the speed at which your pages are loading.

You can use Google to check the loading speed of your blog or website.

They have a tool called PageSpeed Insights, which gives you a score for the loading speed and also shows you the factors that you need to address in order to make the pages load faster.

Another tool that you can use is called GTmetrix. The tool shows you the actual loading speed, and not just a score, as well as pointing out the features that you can address to improve the speed.

There are some common issues that reduce page speed as you might see when you test your blog or website. Some of these are:

- The image size – smaller image sizes load faster

- The files sent by the server – enable compression or caching of these files so they load faster.

- The code used – You need to check your code and remove any bloat characters from the HTML, CSS, and JavaScript.

- Use Accelerated Mobile Pages (AMP).
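
To make the effect of removing bloat and enabling compression more concrete, here is a minimal Python sketch. It assumes a hypothetical `minify_html` helper that only strips HTML comments and collapses whitespace (real minifiers do much more), and it uses the standard-library `gzip` module to approximate what server-side compression would send over the wire.

```python
import gzip
import re


def minify_html(html: str) -> str:
    """Naive minifier: drop HTML comments and collapse runs of whitespace.

    Hypothetical helper for illustration only; it does not treat <pre>,
    inline scripts, or conditional comments the way a real minifier would.
    """
    without_comments = re.sub(r"<!--.*?-->", "", html, flags=re.DOTALL)
    collapsed = re.sub(r"\s+", " ", without_comments)
    return re.sub(r">\s+<", "><", collapsed).strip()


def gzip_size(text: str) -> int:
    """Size in bytes of the text after gzip compression."""
    return len(gzip.compress(text.encode("utf-8")))


page = """
<html>
  <!-- navigation starts here -->
  <body>
    <p>Hello,   world</p>
    <p>Hello,   world</p>
  </body>
</html>
"""

small = minify_html(page)
print(len(page.encode("utf-8")), "bytes raw")
print(len(small.encode("utf-8")), "bytes minified")
print(gzip_size(small), "bytes minified and gzipped")
```

On a tiny page like this one, the fixed overhead of gzip can outweigh the savings, so the numbers are only meaningful on real pages. In practice, compression is usually switched on in the web server or CDN configuration rather than in application code.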

- Make your site mobile-friendly

For a long time, Google has had a primary focus on making the browsing experience great for mobile users.

After mobile devices and tablets started being used as the primary devices for Internet access, Google realized that mobile was the future of search.

This is why they have made a lot of effort in making mobile search more efficient.

In 2015, Google introduced an algorithm update that favored mobile-friendly websites, and the responsiveness of a blog or website became a ranking factor.

The next step that they took was adopting the mobile-first index.

This index started prioritizing the mobile versions of blogs and websites because the searches that came from mobile devices had already overtaken those that came from desktop devices.

Basically, you are already aware that having a responsive site will improve your SEO.

Now you need to know about the technical optimization tasks that you should undertake to improve the indexing of your blog or website and the ultimate rank of your pages.

The best way to go about this is to design a blog or website that is responsive.

The responsive feature allows your blog or website to be displayed properly on any device, mobile or desktop.

The HTML code does not change nor does the URL. However, the CSS code enables the website to render properly irrespective of the screen size of the device.

In order to enable the Google search spiders to recognize the responsiveness of your pages, you must add the “viewport” meta tag to the head section of the page’s code.

This is the tag that will direct the browser to adjust the page dimensions to fit into those of the screen of the device you are using.

If the tag cannot be found, the browser tries to adapt the page as best as it can, which leads to small text and squashed links, and makes the whole experience terrible for users.
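
A quick way to confirm that a page declares the viewport tag is to parse its HTML and look for it. The sketch below uses Python's standard `html.parser` module; the `find_viewport` helper and the sample pages are assumptions made for the example, and the `content` value shown is the commonly used one rather than a requirement.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records the content of the first <meta name="viewport"> tag seen."""

    def __init__(self) -> None:
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        if tag != "meta" or self.viewport is not None:
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "viewport":
            self.viewport = attributes.get("content") or ""


def find_viewport(html: str):
    """Return the viewport content string, or None if the tag is missing."""
    finder = ViewportFinder()
    finder.feed(html)
    return finder.viewport


# Hypothetical pages used only to exercise the helper.
responsive_page = (
    '<html><head><meta name="viewport" '
    'content="width=device-width, initial-scale=1"></head></html>'
)
plain_page = "<html><head><title>No tag</title></head></html>"

print(find_viewport(responsive_page))
print(find_viewport(plain_page))
```

A result of `None` means the page leaves the browser to guess the layout width, which is the situation described above.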

As you know, a bad user experience affects the ranking of your pages, and that comes on top of the disadvantage of your site or blog not being responsive.

Today, most of the online tools that you use for creating websites and blogs, such as Shopify, Wix, or WordPress, are able to create responsive pages, without the need for expert coding.

However, you should always test that your website is mobile-friendly. Google has gone ahead and provided a free tool that you can use to test the responsiveness of your blog or website.

The tool is called the Google Mobile-Friendly Test.

When you use it, it will evaluate the responsiveness of your website or blog, and then you can act on the report that it gives you to make improvements.

- Track and fix errors

There is no way that you can think about getting your website or blog to feature high on the SERPs, without ensuring that the site does not have any coding errors.

The first thing that the search algorithms check on is the code of your website, and if it has any errors, then it cannot rank highly.

This means that you should actively check and see if Google is able to crawl your pages.

Start by submitting a sitemap, which tells Google how to crawl your blog or website.

However, tracking your errors does not end with submitting a sitemap. The search engine spiders can come across an error that is not addressed by the sitemap and therefore be unable to crawl your website or blog.

In order to see which errors are hindering the tracking and indexing of your pages, use the Index Coverage Status Report, which you can get in the Google Search Console.

Simply go into the console, add your URL, and then let the tool run.

The report will show you the pages that have been indexed and which ones have errors.

There are several reasons why your website or blog may not be indexed properly. Some of them are:

- Redirection errors such as loop redirect

- Having a robots.txt file that blocks the crawlers

- Having a “noindex” tag in the coding of the page also stops the crawlers from indexing your pages

- Server errors

- An error 404 refers to a URL that does not exist.
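
Two of the causes listed above, a blocking robots.txt file and a “noindex” tag, can be checked offline with Python's standard library. This is a rough sketch: the `is_blocked_by_robots` and `has_noindex` helpers, the sample robots.txt, and the URLs are assumptions made for the example, and Python's `urllib.robotparser` does not necessarily interpret every rule exactly the way Google's crawler does.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


def is_blocked_by_robots(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """True if the given robots.txt text disallows `agent` from fetching `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(agent, url)


class RobotsMetaFinder(HTMLParser):
    """Sets `noindex` when a <meta name="robots"> tag contains noindex."""

    def __init__(self) -> None:
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            if "noindex" in (attributes.get("content") or "").lower():
                self.noindex = True


def has_noindex(html: str) -> bool:
    """True if the page carries a robots meta tag that includes noindex."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    return finder.noindex


# Hypothetical robots.txt and pages, for illustration only.
robots_txt = """
User-agent: *
Disallow: /private/
"""

print(is_blocked_by_robots(robots_txt, "https://www.yourwebsite.com/private/page"))
print(is_blocked_by_robots(robots_txt, "https://www.yourwebsite.com/blog/"))
print(has_noindex('<head><meta name="robots" content="noindex, follow"></head>'))
```

Running checks like these against the URLs flagged in the report helps separate pages that are blocked on purpose from pages that are blocked by mistake.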

You must go through each URL displayed as having an error and then fix it manually.

Take note that such errors may be costing you valuable visits from people who are incentivized to do business with you, but cannot get access to your pages since they are not being indexed.

- Optimize the site architecture

It is your duty to ensure that you facilitate Google’s task in tracking your blog or website.

The best way to do this is to direct the search engine spiders to the paths they should follow to understand the hierarchy between internal links and the pages they point to.

This is why you need to pay a lot of attention to the site architecture based on the hierarchy and categories of your blog or website.

This is especially crucial if your site has many pages which need a clear organization.

Having a great site architecture boosts the factors that influence crawling and indexing and also helps Google in ranking your page.

Some of the factors are:

- Proper formatting of URLs – make them friendly and easy to crawl (short URLs are the best).

- Having a sitemap – this helps the search engine spider crawl your site better.

- Internal linking – this directs Google on which pages have more authority on the site

When you optimize the site architecture, you help Google understand the content of the site and scan it in full, and you make the structure easy for users to navigate.
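
As an illustration of the sitemap mentioned in the list above, the following Python sketch builds a minimal `sitemap.xml` with the standard `xml.etree.ElementTree` module. The `build_sitemap` helper and the page URLs are hypothetical; the namespace is the one defined by the sitemaps.org protocol, and optional fields such as the last-modified date are left out.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a sitemap.xml document (as a string) listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages that mirror a simple home > blog > article hierarchy.
pages = [
    "https://www.yourwebsite.com/",
    "https://www.yourwebsite.com/blog/",
    "https://www.yourwebsite.com/blog/technical-seo/",
]

print(build_sitemap(pages))
```

Most site builders and SEO plugins generate this file automatically, so a hand-rolled version like this is mainly useful for understanding what the file contains.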

- Optimize images

There is a lot of visual power in images.

They are not simply the pretty aspects of the website or blog.

They are necessary to delight your visitors and incentivize them so that they can perform the desired actions and meet the objective of the page.

However, there is still a lot of optimization work that you must do behind the scenes. You must make sure that they meet their objective without affecting the user experience and page loading speeds of the site.

You may not think that adding images to your site calls for much technical optimization, but it does.

The information that you add will help Google identify the image because the search engine crawlers are not able to read the information on the image.

Let us take a look at the main aspects of optimizing images that you need to know so that you can improve the page SEO and also how it will perform on the Google Images search engine.

Filename

This is the primary element that you have to optimize in the technical SEO of images. This is the name that you give to the image before you upload it to your server.

The name should be friendly and descriptive so that Google can understand it better and know what it represents.

For instance, it is better to name an image as red-car.jpg, rather than IMG657.jpg. This way Google knows that the image is that of a red car and will display it when a user looks for a red car in the Google Images search engine.

Alt text

This is the text in the alt attribute of the image, which describes what the image shows. The text is displayed in place of the image when the image fails to load, possibly due to a slow Internet connection.

It also improves accessibility for people who have special needs: screen readers read the alt text aloud for visually impaired users, and the description helps those who are color blind, given the example of the red car that we used above.

The alt text will also have SEO ramifications since it will help Google know what the image contains. This is similar to the way the file name serves the same purpose.
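
Finding images that lack alt text is easy to automate. The sketch below is one possible approach using Python's standard `html.parser`; the `images_missing_alt` helper and the sample markup are assumptions made for the example.

```python
from html.parser import HTMLParser


class ImageAltAuditor(HTMLParser):
    """Collects the src of every <img> whose alt attribute is missing or empty."""

    def __init__(self) -> None:
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        if not (attributes.get("alt") or "").strip():
            self.missing_alt.append(attributes.get("src") or "")


def images_missing_alt(html: str):
    """Return the src values of images that have no usable alt text."""
    auditor = ImageAltAuditor()
    auditor.feed(html)
    return auditor.missing_alt


# Hypothetical markup: one well-described image and two that need attention.
sample = (
    '<img src="red-car.jpg" alt="Red car parked on a street">'
    '<img src="IMG657.jpg">'
    '<img src="banner.png" alt="">'
)

print(images_missing_alt(sample))
```

An empty alt attribute can be a deliberate choice for purely decorative images, so the output is a list of candidates to review rather than a list of errors.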

File size

The size of the image affects the speed at which your pages load. When you have small-sized images, the page loads faster and this increases the ranking of the whole site, and also that of the image itself – Google gives ranking priority to images with a small size.

There are several tools that you can use to reduce the file size of an image without affecting its quality. These tools can also remove unnecessary information from the image, which helps in reducing the size.

TinyPNG is one of the most popular free online tools that you can use to achieve this objective.

File format

There are several other file formats, apart from JPG and PNG, that offer better compression and perform better on the Internet. These are formats like JPEG XR, WebP, and JPEG 2000.

Try and use these formats when uploading images to your blog or website.

Image dimensions

The best way to upload images is to make sure that they are in the dimensions that you want them to be displayed in.

This stops the browser from resizing images, which will take up a lot of resources and space, and reduce the speed at which your pages load.

Optimize loading

This refers to images that are off-screen when a page loads.

Their loading should be postponed until the user scrolls to them, a technique known as lazy loading.

If you create a site on WordPress, you can use the Lazy Load plugin, which stops images from loading if they are out of the screen and only loads them when the user scrolls down to where they are.
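
Outside WordPress, browsers also support a native `loading="lazy"` attribute on image tags. The Python sketch below shows one rough way to add it to existing markup. The `add_lazy_loading` helper is hypothetical, and editing HTML with regular expressions is fragile, so treat this as an illustration of the attribute rather than a production tool.

```python
import re

IMG_TAG = re.compile(r"<img\b[^>]*>", flags=re.IGNORECASE)
HAS_LOADING = re.compile(r"\bloading\s*=", flags=re.IGNORECASE)


def add_lazy_loading(html: str) -> str:
    """Add loading="lazy" to every <img> tag that does not already set it."""

    def patch(match):
        tag = match.group(0)
        if HAS_LOADING.search(tag):
            return tag  # respect an explicit choice such as loading="eager"
        # Insert the attribute right after the "<img" that opens the tag.
        return tag[:4] + ' loading="lazy"' + tag[4:]

    return IMG_TAG.sub(patch, html)


# Hypothetical markup for illustration.
before = '<img src="red-car.jpg" alt="Red car"><img src="hero.jpg" loading="eager">'
print(add_lazy_loading(before))
```

Images that are visible as soon as the page opens are usually better left to load normally, since delaying them can make the page feel slower.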

- Remove duplicate content

Removing duplicate content is another crucial technical optimization task that you must perform. It does have a lot of impact on the ranking of your page.

When talking about duplicate content, you should know we are referring to both text and images that you may have copied from other websites. It also refers to text and images that you repeat within your own website or blog, without knowing it.

It is simple to remove duplicate content that is copied from other sites. You just have to make sure that the content you use as a reference is written in your own words.

Copying content from another site is also known as plagiarism, which is an act that is heavily frowned upon. It will cause your site to be penalized heavily so you must avoid it at all costs.

Plagiarism can also amount to copyright infringement, which can have serious legal ramifications for your business.

Removing duplicate content from your internal pages can be taxing at times, and it requires some technical know-how.

One common example of this happens when people update a page and then create a new URL for it without deleting the old page.

Another example occurs when you have two nearly identical URLs pointing to the same page:

- https://www.yourwebsite.com/page/

- https://www.yourwebsite.com/page

The second one does not have a trailing slash and is, therefore, a different URL, but it still points to the same page.

This is bad because when Google realizes that you have duplicate content on your website, it will prioritize the ranking of the original content, which means that the old pages will be ranked first above the updated pages.

At times, the search engine spiders do not prioritize the older pages and simply filter both pages negatively.

In order to check if you have duplicate content on your blog or website, you can use a handy tool such as Siteliner.

The tool shows you the number of duplicate pages you have and provides their URLs so you can edit them quickly.
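If you would like a rough idea of how such a check works for the trailing-slash case described above, here is a minimal sketch in Python. It is not how Siteliner works internally. The function names are made up for illustration, and the normalization rules (lowercase host, no trailing slash, no fragment) are assumptions you may need to adjust for your own site.

```python
from collections import defaultdict
from urllib.parse import urlsplit, urlunsplit


def normalize_url(url: str) -> str:
    """Build a comparison key: lowercase scheme and host, no trailing slash, no fragment."""
    parts = urlsplit(url)
    path = parts.path.rstrip("/") or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, parts.query, ""))


def find_duplicate_groups(urls: list[str]) -> list[list[str]]:
    """Group URLs that collapse to the same normalized form."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize_url(url)].append(url)
    # Only groups with more than one member are potential duplicates.
    return [group for group in groups.values() if len(group) > 1]


urls = [
    "https://www.yourwebsite.com/page/",
    "https://www.yourwebsite.com/page",
    "https://www.yourwebsite.com/about",
]
duplicates = find_duplicate_groups(urls)
```

Here `duplicates` holds one group containing the two `/page` URLs, which are the ones you would then canonicalize or redirect.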

Once you have identified the pages that have duplicate content, you can direct Google as to which of the two is preferred over the other.

This is where the canonical tag comes in: you place it in the code of the duplicate page so that it points to the URL of the preferred page.

You can also use a 301 (permanent) redirect to send users and search engine spiders to the page that you want to have authority over the other one.

This is the best way to stop the pages from competing against one another for ranking: the preferred page, usually the most recent one, is ranked alone, while the other is ignored.

- Create structured data

By this time, it has dawned on you that Google prefers organized sites, right?

Organizing your website or blog makes it easier for the search engine spiders to understand your pages and decide which path they should follow when scanning your site.

When you create structured data, you assist these crawlers to achieve this task.

The function of structured data is to create markups within the code of the page, which guide the crawlers on various aspects of the page content.

Basically, structured data describes the site to the search engine spiders.

Apart from assisting in crawling and indexing the site, markups are also used when displaying search results.

One of the main functions of structured data is the creation of rich snippets. These are then shown on the search engines.

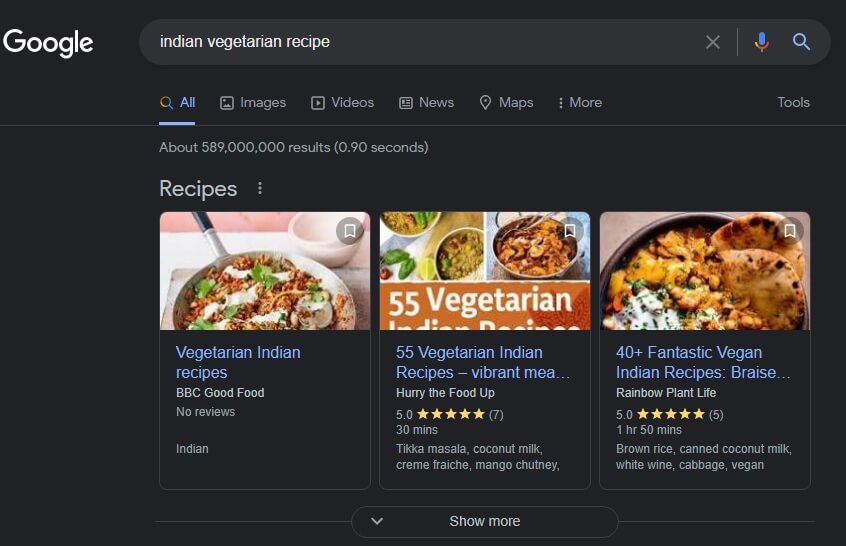

Here is an example of when we searched for an Indian vegetarian recipe.

From the image above, you can see that the latter two results have information provided at the bottom, which includes the rating of the recipes, how many people rated the recipes, the ingredients of the recipes, etc.

This information is missing from the first result, probably because the owner did not use structured data in the code of the page.

This type of information can also be shown on other kinds of content, such as video, local business search, and product results.

The structured data not only helps Google to understand the content of the page but also gives rich information to users, so they can make informed decisions on whether they should click through to the website or not – those with rich snippets will probably have more clicks.
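To make the recipe example more concrete, here is a hedged sketch of what the markup behind such a rich snippet might look like. It uses Python to build a JSON-LD block with the Schema.org `Recipe` and `AggregateRating` types. The function name, the recipe, and the rating numbers are placeholders invented for illustration, not data from the search result above.

```python
import json


def recipe_jsonld(name, ingredients, rating_value, rating_count):
    """Return a JSON-LD <script> element describing a recipe and its rating."""
    data = {
        "@context": "https://schema.org",
        "@type": "Recipe",
        "name": name,
        "recipeIngredient": list(ingredients),
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "ratingCount": rating_count,
        },
    }
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"


# Placeholder values, for illustration only.
snippet = recipe_jsonld("Palak Paneer", ["spinach", "paneer", "garlic"], 4.8, 125)
```

The resulting `snippet` would be placed in the HTML of the recipe page. Whether a search engine actually shows a rich snippet is still its own decision.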

Structured data is not only about showing rich snippets. It can also describe breadcrumbs, which show the path that a user takes through the site to reach a page.

This is information that can also be displayed on the SERPs.

Structured data can also be used to divide a page and help Google understand what each section is about.

For example, you can insert your contact information on a page, but that alone will not help Google understand what that contact section is about.

You can make it easier for Google to understand the contact information by creating a markup within the code.

This is what is known as Schema markup (based on the Schema.org vocabulary), and it requires you to have some technical knowledge of coding a website.
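As a rough illustration of the contact example, the sketch below builds the JSON-LD for a contact section using the Schema.org `Organization` and `ContactPoint` types. The function name, business name, phone number, and email address are all hypothetical placeholders.

```python
import json


def contact_jsonld(name, url, telephone, email):
    """Return JSON-LD text that labels a site's contact details for crawlers."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "contactPoint": {
            "@type": "ContactPoint",
            "contactType": "customer service",
            "telephone": telephone,
            "email": email,
        },
    }
    return json.dumps(data, indent=2)


# Hypothetical business details.
markup = contact_jsonld(
    "Your Website",
    "https://www.yourwebsite.com",
    "+91-00-0000-0000",
    "hello@yourwebsite.com",
)
```

The output would go inside a `<script type="application/ld+json">` element on the contact page.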

You may also use the Schema App Structured Data plugin when you want to do this in WordPress.

Google has also come up with a tool that helps developers and other SEO practitioners in creating structured data for a website, without having to resort to Black Hat techniques.

The Structured Data Markup Helper was created by Google to help you do this with ease.

After you have created structured data for your blog or website, you can use the Structured Data Testing Tool to see if everything went as you expected.

The result that you get displays exactly how Google is crawling your pages, and if there are any errors or problems that the crawlers are coming across.

- Check your sitemap

We mentioned sitemaps above when we were discussing the issues of a site’s architecture. These guide the Google crawlers on the link structure of the site.

Let us take a look at the finer details of this feature which is crucial for technical SEO.

A sitemap is an XML file that lists all the pages and documents found in the blog or website, and also lays out the connections between them.

When you submit this file to Google, it is able to see which pages it will crawl and which are the most important – the hierarchy.

Websites and blogs that are very big or have isolated pages benefit a lot from having a sitemap. The sitemap ensures that all your pages are tracked and indexed by the search engine spiders.

There are various ways in which you can submit a sitemap to Google.

You can quickly do this through the Google Search Console, which is very specific about sitemap reporting.

However, if you have the technical know-how, you can guide Google to the sitemap by adding its path to the robots.txt file, or use a ping function to prompt Google to request the sitemap for tracking.
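For readers who want to see what a sitemap file contains, here is a minimal sketch that builds one with Python's standard library and also produces the line you would add to robots.txt. The page URLs, dates, and sitemap location are placeholders, and most content management systems or plugins can generate this file for you.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """pages: list of (url, last_modified_date) tuples; returns the sitemap XML as text."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder pages and dates.
sitemap_xml = build_sitemap([
    ("https://www.yourwebsite.com/", "2024-01-15"),
    ("https://www.yourwebsite.com/page", "2024-01-10"),
])

# Line to add to robots.txt so crawlers can discover the sitemap.
robots_line = "Sitemap: https://www.yourwebsite.com/sitemap.xml"
```

You would save `sitemap_xml` as `sitemap.xml` at the location named in `robots_line`, or submit that URL through the Google Search Console.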

Read this detailed Google tutorial to learn how you can submit your sitemap properly.

- Fix Error 404

You can imagine the frustration when a reader enters a search on Google search, comes across your website or blog, finds that it has the exact information he or she needs, and is then greeted with an Error 404 after clicking on the search results.

This would not augur well for the dependability of your website and the reader may never click back whenever he or she comes across your website or blog in the SERPs again.

Google also understands the frustration of error 404 pages and penalizes websites and blogs that lead to the error.

Error 404 is a server response to a request for a page.

When it is displayed, it indicates that the user clicked on a URL and the browser reached the server, but the server could not find the requested page.

This normally happens when the URL has been changed or deleted and the browser tries to access the old URL.

You can use a 301 redirect to stop the error 404 from occurring. The redirect will take the user to the correct URL.
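The logic behind a 301 redirect can be sketched in a few lines. This is only a simplified model of what a web server or redirect plugin does, and the paths and names in it are hypothetical.

```python
# Hypothetical site data: pages that exist, and old paths mapped to their new homes.
LIVE_PAGES = {"/", "/new-page"}
REDIRECTS = {"/old-page": "/new-page"}


def resolve(path, live_pages=LIVE_PAGES, redirects=REDIRECTS):
    """Return (status_code, target_path) for a requested path."""
    if path in live_pages:
        return 200, path             # the page exists, serve it
    if path in redirects:
        return 301, redirects[path]  # moved permanently, send the visitor on
    return 404, None                 # nothing known about this path


status, target = resolve("/old-page")
```

With the redirect map in place, a request for `/old-page` is answered with a 301 pointing at `/new-page` instead of a 404. In practice you would configure this in your server, CMS, or a redirect plugin rather than in your own code.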

Sometimes the error 404 message is displayed even though the page the user wants does exist.

This happens when there is a typo in the URL that was typed or linked.

In order to prevent a user from leaving the site in this case, you can develop a custom error page that will suggest the correct spelling of the URL, or suggest other paths that the user can use.
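One possible way to power the suggestions on such a custom error page is fuzzy matching against the paths that do exist. The sketch below uses `difflib.get_close_matches` from Python's standard library. The list of known paths and the `cutoff` value of 0.6 are assumptions you would tune for your own site.

```python
import difflib


def suggest_paths(requested, known_paths, limit=3):
    """Return up to `limit` existing paths that look similar to the mistyped one."""
    return difflib.get_close_matches(requested, known_paths, n=limit, cutoff=0.6)


# Hypothetical list of pages on the site.
known = ["/technical-seo", "/contact", "/about"]
suggestions = suggest_paths("/technical-seoo", known)
```

For the mistyped `/technical-seoo`, the only close match is `/technical-seo`, which the error page could then offer as a "Did you mean?" link.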

There are several technical SEO tools that you can use to find pages that have an error 404 and correct them.

Some of these tools are:

- Dead Link Checker

- Screaming Frog

- Google Search Console

- Fix availability errors

This is another common and frustrating error that many users encounter.

This error is shown when the site is not available.

In this case, there is no error page – the site simply refuses to load.

What makes this error very bad is the fact that Google too will not be able to find the website or blog in order to rank it.

If this error occurs several times, Google may assume that the website or blog no longer exists and remove it from the SERPs.

This means that you will no longer appear on the results, and this can really harm your business.

This is the reason why you need to fix any availability errors that your website or blog is experiencing.

This problem is usually not caused by anything you have done on your side, but by an error coming from your hosting company.

You should contact the hosting company and tell them to fix the errors.

When you are purchasing a hosting package, make sure that there is a Service Level Agreement (SLA), which is a legal agreement that shows the uptime that the hosting company will give to your website or blog.

The infrastructure of these companies is designed so that they can operate 24 hours a day and 365 days a year.

Even so, there are times when the software and hardware of the hosting company fail.

Upgrades and maintenance tasks can also lead to downtime, during which your site will not be available.

You should therefore always keep an eye on how much uptime the hosting provider gives you and check your site to ensure that the terms set out in the SLA are met.
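Checking an SLA comes down to simple arithmetic. The sketch below assumes a hypothetical 99.9% uptime guarantee and 90 minutes of measured downtime over a 30-day month. Both numbers are made up for illustration, so substitute the figures from your own agreement and monitoring tool.

```python
def uptime_percent(total_minutes, downtime_minutes):
    """Share of the period during which the site was reachable, in percent."""
    return 100.0 * (total_minutes - downtime_minutes) / total_minutes


def allowed_downtime_minutes(sla_percent, total_minutes):
    """Downtime budget that a given SLA percentage leaves for the period."""
    return total_minutes * (100.0 - sla_percent) / 100.0


MINUTES_IN_30_DAYS = 30 * 24 * 60  # 43,200 minutes

SLA_PERCENT = 99.9  # hypothetical guarantee
measured = uptime_percent(MINUTES_IN_30_DAYS, downtime_minutes=90)
budget = allowed_downtime_minutes(SLA_PERCENT, MINUTES_IN_30_DAYS)
sla_met = measured >= SLA_PERCENT
```

In this example the budget is roughly 43 minutes per month, so 90 minutes of downtime (about 99.79% uptime) would fall short of the guarantee.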

- Check for browser compatibility

It makes proper SEO sense to ensure that your website or blog is compatible with a wide range of browsers.

Today, many people use Google Chrome or Safari when browsing the Internet.

There are also users who use lesser-known mobile browsers like the Phoenix Browser, while others use Internet Explorer.

Each of these browsers reads websites and blogs differently, and some features on your website may not work in some of them.

For example, if someone is using an old browser, there are features that are compatible with newer browsers that will not display properly, or are not supported.

Therefore, you should always be on the lookout for browser compatibility limitations, and perform technical SEO to address these issues.

This is especially true if some of your users are browsing the Internet with older browsers.

- Fix security issues

Google also considers the security of a website or blog when it comes to ranking.

This is an effort to protect users from falling into fraudulent activities or having their personal information stolen.

For instance, in the year 2014, Google announced that the use of Secure Sockets Layer (SSL) protection would be a ranking factor. This means that your website or blog should have the HTTPS prefix rather than the old HTTP.

The reason they made it a ranking factor is purely to protect users from the prying eyes of hackers, who had become a nuisance when it came to stealing personal information online, and to protect users from accessing spam content.

When a user browses through a site with an HTTPS protocol, he or she has peace of mind that the information they are sharing is encrypted and cannot be hacked easily.

This is especially crucial in payment gateways and subscription pages.

When a user has to complete a payment, the HTTPS protocol serves as a reminder that their information is protected – if you do not have SSL protection, then people will not buy from your site and you will lose a lot of revenue.

When you are hosting your website or blog, you should pay for SSL certification. Some hosting companies make this part of their standard packages.

If you are migrating from the HTTP protocol to HTTPS, you should check your website or blog to make sure that all features have maintained their functionality. Test this before you make the change.

The URLs of the pages will also change, so you should redirect the old HTTP URLs with 301 redirects and use the canonical tags we talked about before to avoid having the HTTP and HTTPS versions treated as duplicate pages. These tags will also serve to show Google which pages should be ranked first.

Migrating from HTTP to HTTPS is a complex task and it can lead to complications for your website or blog.
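To illustrate how old HTTP addresses map onto their HTTPS equivalents, here is a small sketch that computes the target of a 301 redirect for a given URL. The function name is hypothetical, and it assumes the site uses the default ports and keeps the same host, path, and query string after the migration.

```python
from urllib.parse import urlsplit, urlunsplit


def https_redirect_target(url):
    """Return the HTTPS URL to redirect to, or None if the URL is not plain HTTP."""
    parts = urlsplit(url)
    if parts.scheme != "http":
        return None
    # Keep host, path, query, and fragment; only the scheme changes.
    return urlunsplit(("https", parts.netloc, parts.path, parts.query, parts.fragment))


target = https_redirect_target("http://www.yourwebsite.com/page?ref=newsletter")
```

Most hosting control panels and web servers offer a built-in setting for this, so a sketch like this is mainly useful for checking that every old URL has a sensible new address.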

This is why you should let technical professionals do it for you.

There are hosting companies that offer to do the migration on your behalf to ensure that everything works as it should.

- Optimize your site for international audiences

If you have a blog or website that targets people in other countries, who may speak a different language, then you need to do some technical SEO to give them the best user experience possible.

Without this optimization, international users can have difficulty finding information on your site that caters to their needs.

There are two main ways in which you can optimize your site for international users:

Language

Multilingual websites are those that are targeted at people who use different languages in other geographical regions.

You must include the “hreflang” attribute, which indicates that your page has a version that is targeted at speakers of another language.

Country

Multi-regional sites are those that are targeted at people living in different countries, and they should have a URL structure that makes it easy to target the whole domain, or some of its pages, to specific countries.

You can do this by using a country code top-level domain (ccTLD) such as “.in” for India.

You can also use a generic top-level domain (gTLD) with a country subdirectory, such as “yourwebsite.com/in” for India.
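The hreflang annotation mentioned under Language above is usually written as a set of `<link>` elements in the head of each page, one per language or region version. The sketch below generates them in Python. The language codes and URLs are placeholder assumptions that reuse the subdirectory example for India.

```python
from html import escape


def hreflang_links(versions):
    """versions: mapping of hreflang code -> absolute URL of that version."""
    return [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in versions.items()
    ]


# Hypothetical versions of the same page.
links = hreflang_links({
    "en": "https://www.yourwebsite.com/",
    "hi-in": "https://www.yourwebsite.com/in/",
    "x-default": "https://www.yourwebsite.com/",
})
```

Each version of the page should carry the full set of links, including one that points to itself, so that the versions reference one another consistently.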

- Use canonicalization

We have briefly mentioned canonicalization in the steps above and shown that it is used to direct Google on which pages it should index and which ones to ignore.

This is an important technical SEO subject that deserves a better examination.

When you update a page, Google sometimes does not know which page it should index, especially if you have used a new URL.

This is how the rel="canonical" tag came into being.

With this tag, the search engines are better informed on the pages to index.

The canonicalization process shows the search engine spiders where the original and master version of the content piece is located.

Basically, what you are doing is telling the search engine, “Hey Big Google, do not index this page, but index the source page here, instead”.

Now when you create an update, you will not run the risk of creating duplicate content, which could affect your ranking.

Where do you place the canonicalization tag on a web page’s code?

In order to avoid mistakes when indexing your site, you should place the canonicalization tag on ALL your website or blog pages.

For instance, if you have a site with “https://www.yourwebsite.com” and this is the URL that you want to use, then it will be indexed separately from the URL “https://yourwebsite.com”.

If a website uses both of these versions of its URL to access the same page, then Google considers the pages to be duplicate content, and this will affect the rank of that particular site.

Don’t fall into the same trap. Use canonicalization!
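As a minimal sketch of what the tag looks like in practice, the function below turns any variant of a page URL into a canonical `<link>` element that points at one preferred host. The preferred host, the decision to force HTTPS, and the decision to drop query strings are all assumptions made for this example.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit

# Assumption: the www version over HTTPS is the one you want indexed.
PREFERRED_HOST = "www.yourwebsite.com"


def canonical_link(url, preferred_host=PREFERRED_HOST):
    """Return the canonical <link> element for any variant of a page URL."""
    parts = urlsplit(url)
    # Keep only the path; the query string and fragment are dropped in this sketch.
    canonical = urlunsplit(("https", preferred_host, parts.path or "/", "", ""))
    return f'<link rel="canonical" href="{escape(canonical)}" />'


tag = canonical_link("https://yourwebsite.com/page")
```

The resulting `tag` goes in the `<head>` of the page, so both the www and non-www variants declare the same master version.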

TIP

You should know the difference between content penalties and content filtering

As you saw in the duplicate content section above, there is no such thing as getting penalized for having duplicate content within your own website.

However, you should avoid having duplicate content due to filtering issues that will affect your ranking on the SERPs.

When you have duplicate content and it affects your ranking, you are not being penalized by the search engine spiders. Instead, they are “confused” about which content you want indexed and displayed, and this “confusion” leads to your website or blog being ranked lower.

- Register for Webmaster Tools

You should not forget to register for webmaster tools. These are tools such as Bing Webmaster Tools and Google Search Console.

We have mentioned Google Search Console several times in this article, but you have to register first in order to use it.

Registering for webmaster tools is not only crucial to technical SEO but also to other optimization tasks. These tools quickly show you what issues are affecting your ranking so you can correct them for better performance.

Another reason why you should register for webmaster tools is so you can submit your sitemap.

Google has also added Core Web Vitals reporting to the Search Console. These vitals are already being used for ranking purposes, so you should be aware of them too.

The Core Web Vitals reports show you information on what you should improve in order to meet their threshold.

For instance, they can show you information about your mobile page experience once you meet their threshold for mobile traffic.
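To give a feel for how a threshold check works, here is a small sketch that rates three Core Web Vitals metrics. The threshold values are my understanding of Google's published guidance at the time of writing (INP replaced the older FID metric in 2024). Treat them as assumptions and confirm them against the current documentation, because they can change.

```python
# (good_up_to, needs_improvement_up_to) per metric; values are assumptions to verify.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # Largest Contentful Paint, in seconds
    "INP": (200, 500),   # Interaction to Next Paint, in milliseconds
    "CLS": (0.1, 0.25),  # Cumulative Layout Shift, unitless score
}


def rate(metric, value):
    """Classify a measured value as good, needs improvement, or poor."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


# Hypothetical measurements for one page.
report = {name: rate(name, value) for name, value in
          {"LCP": 3.1, "INP": 180, "CLS": 0.3}.items()}
```

For these sample numbers the page would have a good INP, an LCP that needs improvement, and a poor CLS, which tells you where to focus first.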

When you register for webmaster tools you will be able to access the “Coverage” report, which helps in auditing your site and showing each and every issue that is affecting your ranking.

As you can see, registering for webmaster tools gives you access to tools that can work very well in boosting your SEO performance across all your pages.

In conclusion

You do not have to be a technical whiz in order to perform technical SEO on your website or blog. There are several amazing tools that you can use to achieve this purpose even with minimum technical knowledge – we have mentioned a few in this article.

However, even when you have these tools, it can still be challenging to understand the fine balance between the various arms of SEO, when you should use them, and when you should not.

The topic of search engine optimization is very wide and sometimes you can get lost wondering which tasks you should prioritize over others.

You are not alone, and this is why you should sometimes outsource some of these functions to SEO agencies and teams which are better equipped to handle the various aspects of the complete search optimization package.

Experimentation is one way in which you can figure out which is the best mix between technical SEO, Content SEO, and other optimization techniques available for better ranking.

For example, if you want to use rich media on your blog or website, you may have to sacrifice page loading speeds, since it would provide more value than if you removed the media and had a fast-loading site that consists entirely of text.

Experiment and share what your results are with us in the comments section below.